An experiment with GPT-4 vision and Excalidraw

Building flowcharts online is often tedious, and the UX of specific chart tools can hinder ideas. Sketching on paper is often the quickest way to dump a thought or bring a basic flow to life. However, once drawn, sharing or modifying a sketch is not easy. In this article, I detail how I attempted to resolve this pain point by combining the power of paper, GPT-4 vision, and Excalidraw.

The problem

One of the coolest pieces of web-based software I have encountered in the past few years is Excalidraw. It felt right from the very first time I used it. It is great value for money (basically free), intuitive, lightweight, and smart, primarily because it lets users easily save files locally. It's not often that we get to manipulate files and store them however we want these days!

In addition, Excalidraw is a very neat piece of software because it's the closest thing to simple hand-drawn flowcharts. I rely on Excalidraw a lot, as I lean toward the visual side of the thinking spectrum. Apart from pen and paper, nothing beats a quick Excalidraw sketch for high-level flowcharts.

However, I frequently reproduce hand-drawn flowcharts in Excalidraw to spare my colleagues from my handwriting or to keep a digital version I can return to. This feels inefficient, and with today's technology, there must be a simple way to improve this workflow.

This led me to wonder whether there was a fun way to connect a few tools and port hand-drawn flowcharts to a digital format.

Like the whole tech industry, I had been tinkering with gpt-3.5-turbo and gpt-4 quite a lot in 2023 for text generation. Through these experiments, I became aware of the lesser-known gpt-4-vision-preview model, though I had never used it until this project. Using it in production is probably a stretch, but for a proof of concept, it was worth giving it a go and seeing if I could use this model to generate an Excalidraw flowchart with minimal effort. Of course, I needed a few things in between to make it work.

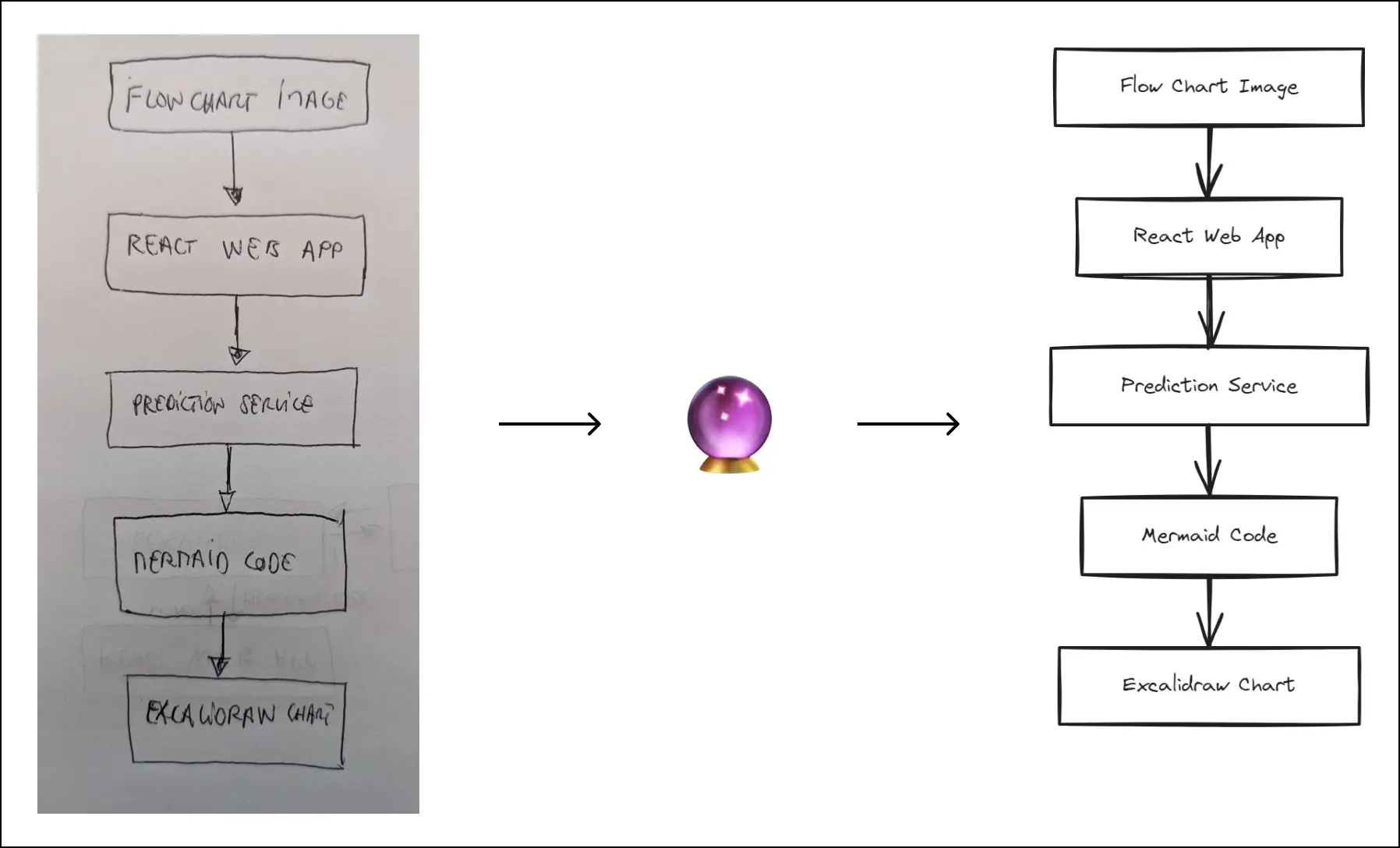

Putting it together

For this experiment to work, I needed a way to get images to the model and then a way to generate the Excalidraw charts. To my knowledge, there is no direct way to produce Excalidraw charts programmatically, so I needed an intermediate format that both gpt-4-vision-preview and I knew and could use: it couldn't be anything too obscure or too new. That's where Mermaid comes into play. It is widely used and well documented, but more than anything, the Excalidraw team had published a mermaid-to-excalidraw npm package that converts Mermaid diagrams into Excalidraw elements. With that sorted, I only had to:

- Get an image using a web app

- Send the image to gpt-4-vision-preview

- Prompt the model to return valid Mermaid code

- Return that code to the web app

- Convert the Mermaid code to an Excalidraw chart

- Display the result in an Excalidraw editor

Plain and simple! What could go wrong?
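To make the "send the image" and "prompt the model" steps a bit more concrete, here is a minimal sketch of a Chat Completions request body that carries the photo as a base64 data URL next to the instructions. The prompt wording, the `build_vision_request` helper, and the default `max_tokens` are hypothetical (the actual prompt isn't shown in this post). The resulting body could be passed to the official Python SDK as `client.chat.completions.create(**body)` or POSTed to the `/v1/chat/completions` endpoint.

```python
import base64

# Hypothetical prompt: the wording used in the app is not published.
PROMPT = (
    "Convert the hand-drawn flowchart in this image into Mermaid flowchart code. "
    "Only use Mermaid flowchart syntax (start with 'flowchart TD' or 'flowchart LR'). "
    "Return only the Mermaid code, with no explanation."
)


def build_vision_request(
    image_bytes: bytes,
    mime_type: str = "image/png",
    model: str = "gpt-4-turbo",
    max_tokens: int = 1000,
) -> dict:
    """Build a Chat Completions request body with one image and the instructions."""
    # Images can be sent inline as a base64-encoded data URL.
    encoded = base64.b64encode(image_bytes).decode("ascii")
    data_url = f"data:{mime_type};base64,{encoded}"
    return {
        "model": model,
        "max_tokens": max_tokens,
        "messages": [
            {
                "role": "user",
                # A single user message can mix text parts and image parts.
                "content": [
                    {"type": "text", "text": PROMPT},
                    {"type": "image_url", "image_url": {"url": data_url}},
                ],
            }
        ],
    }
```

Keeping the prompt in one place makes it easy to restate constraints, such as "flowcharts only", that the downstream converter depends on.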

Based on my short experience running long-lived API calls to OpenAI from a Vercel-hosted Next.js app, I knew there were quite a few limitations there, starting with function execution time limits. To sidestep them, I decided to implement a dedicated service, available through an async API.

Simplifying my life client-side came at a cost, though. Implementing an async API with FastAPI or Flask (on this project, I went with FastAPI) requires a little more work: the long-running call is handed off to a Celery worker, and Celery needs a result backend to store task results until the client fetches them. Usually, this means Redis. So, my simple implementation became a bit more complex.
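The submit-then-poll pattern behind that async API can be sketched without any infrastructure. The code below is a stdlib stand-in, not the Celery setup itself: a thread pool plays the worker, an in-memory dict plays the Redis result backend, and the state names mimic Celery's. `JobQueue` and `wait_for` are hypothetical names.

```python
import time
import uuid
from concurrent.futures import Future, ThreadPoolExecutor
from typing import Any, Callable


class JobQueue:
    """In-memory stand-in for a Celery worker plus a Redis result backend.

    submit() returns immediately with a job id (like enqueuing a task), and
    status() is what a polling endpoint would send back to the client.
    """

    def __init__(self, worker: Callable[[bytes], Any], max_workers: int = 2) -> None:
        self._worker = worker
        self._pool = ThreadPoolExecutor(max_workers=max_workers)
        self._jobs: dict[str, Future] = {}

    def submit(self, payload: bytes) -> str:
        job_id = uuid.uuid4().hex
        self._jobs[job_id] = self._pool.submit(self._worker, payload)
        return job_id

    def status(self, job_id: str) -> dict:
        future = self._jobs.get(job_id)
        if future is None:
            return {"state": "UNKNOWN"}
        if not future.done():
            return {"state": "PENDING"}
        error = future.exception()
        if error is not None:
            return {"state": "FAILURE", "error": str(error)}
        return {"state": "SUCCESS", "result": future.result()}


def wait_for(queue: JobQueue, job_id: str, timeout: float = 30.0, interval: float = 0.5) -> dict:
    """Client-side polling loop: ask for the status until the job settles or times out."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        status = queue.status(job_id)
        if status["state"] != "PENDING":
            return status
        time.sleep(interval)
    return {"state": "TIMEOUT"}
```

One difference worth knowing: Celery reports an id it has never seen as `PENDING`, so the `UNKNOWN` state here is a simplification. In a FastAPI + Celery version, `submit` would correspond to a route that enqueues the task and returns its id, and `status` to a route that reads the result backend.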

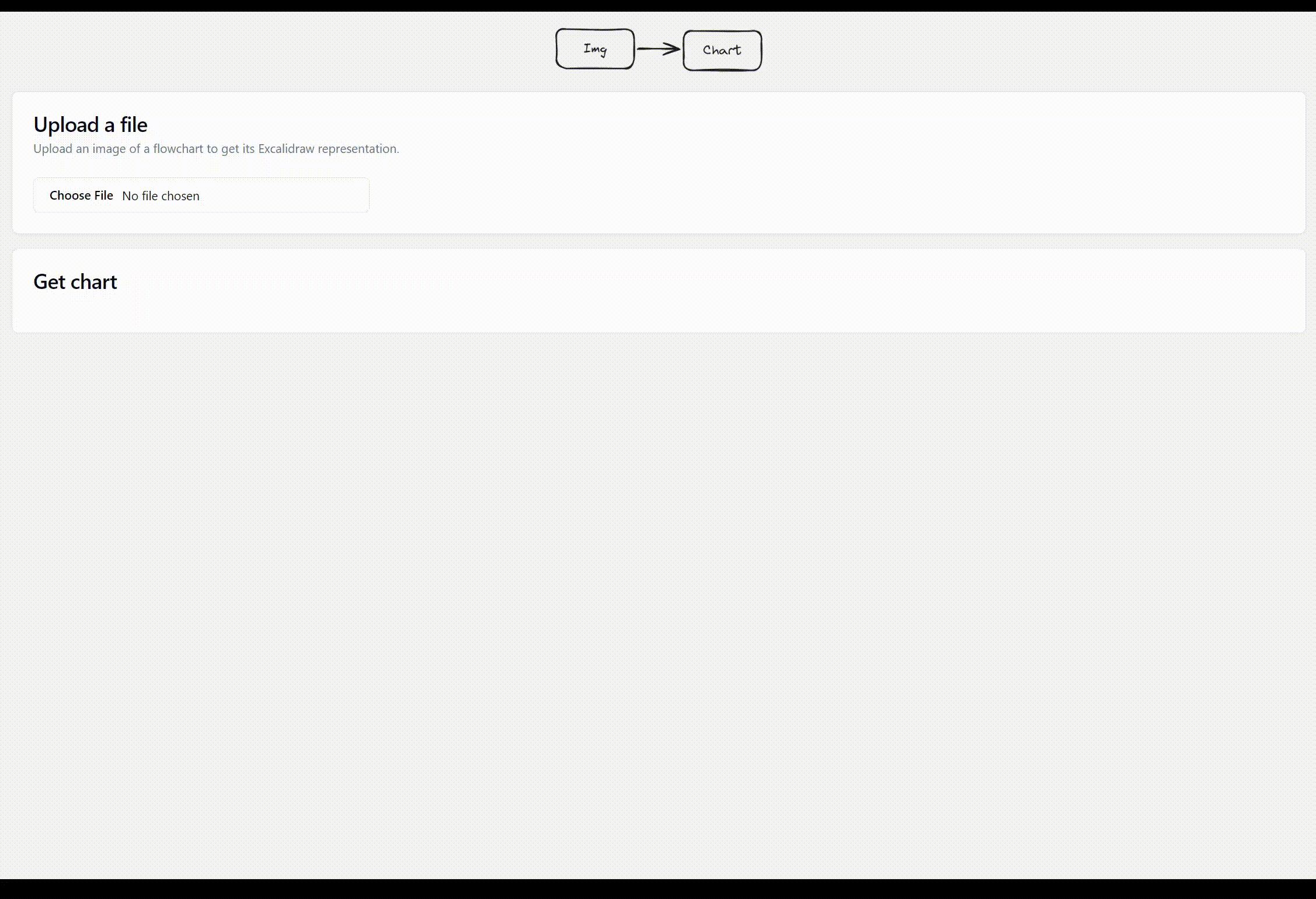

And that's without counting the many issues I faced trying to make Excalidraw play nicely with Next.js.

Next.js is my go-to JS framework (hence the choice; you know the saying: choose boring technology!), but its default server-side rendering was a pain. I tried to circumvent this with the dynamic-import workaround mentioned in the @excalidraw/excalidraw docs. It was kind of working, but all my builds were failing. So... it wasn't working at all, in fact. My fallback (after hours of trying to make it work) was to switch to plain React, on the assumption, later confirmed, that it would work much more smoothly and that this was good enough for a POC. I felt less cool, but relieved.

In the end, I settled on:

- A React client for upload and results, deployed on Vercel.

- A FastAPI backend for processing, deployed on Fly.io, with Upstash Redis as the Celery result backend.

- A monorepo with continuous deployment via GitHub Actions.

On the AI side:

- I started with gpt-4-vision-preview and switched to gpt-4-turbo while writing this article, since the model is now out of preview (note: all of this was done before gpt-4o was announced).

- I used LangChain mainly to make it easier to chain cleanup functions and to switch models.

Using LangChain with gpt-4-vision-preview wasn't a walk in the park, mainly because of the limited documentation about that particular model. Given the project's size, I could have gone without it, but it will come in handy for future iterations.
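As an illustration of what "chaining cleanup functions" can look like, here is a minimal sketch using plain function composition rather than LangChain. The specific steps (normalizing line endings, unwrapping a Markdown code fence the model may add, trimming whitespace) are assumptions about typical model output, not the app's actual chain, and all names are hypothetical.

```python
import re
from functools import reduce
from typing import Callable

# Matches a Markdown code fence (three backticks, optional "mermaid" tag)
# and captures its body.
FENCE_RE = re.compile(r"`{3}(?:mermaid)?[ \t]*\n(.*?)`{3}", re.DOTALL)


def normalize_newlines(text: str) -> str:
    return text.replace("\r\n", "\n")


def strip_code_fence(text: str) -> str:
    """Keep only the body of the first fenced block, if the model added one."""
    match = FENCE_RE.search(text)
    return match.group(1) if match else text


def strip_whitespace(text: str) -> str:
    return text.strip()


def compose(*steps: Callable[[str], str]) -> Callable[[str], str]:
    """Chain cleanup steps left to right: compose(f, g)(x) == g(f(x))."""
    return lambda text: reduce(lambda acc, step: step(acc), steps, text)


clean_mermaid = compose(normalize_newlines, strip_code_fence, strip_whitespace)
```

In LangChain, the same functions could be wrapped in `RunnableLambda` and piped (`|`) after the model and an output parser such as `StrOutputParser`; the appeal is that swapping the model doesn't touch the cleanup steps.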

The result

Due to the unpredictable nature of LLMs, the lean nature of my code, and the chaining of multiple tools, I had low expectations, but it's working... with some caveats!

- Most of the time, the app returns an acceptable flowchart that can be easily fixed in the Excalidraw editor.

- Sometimes, it gets it spot on, which feels pretty magical.

- Other times, it gets it so wrong that it's frustrating and less helpful.

In other words, your typical LLM experience.

Demo

This is a real-time demo (you'll enjoy the long response time) taken on the deployed app with an actual sample (sample #3 shown later). In the first seconds, I select the image from my Explorer, which is not shown in the gif. The processing starts when the skeleton and spinner show up.

I know this gif isn't perfect, and I'll be working on a more detailed video later on.

Sample inputs and outputs

In this section, let's review how inputs compare with outputs, and what mermaid code the model generated. I know what types of graphs work, so I didn't push too hard when drawing the inputs, but all the outputs are first shots, no bullshit!

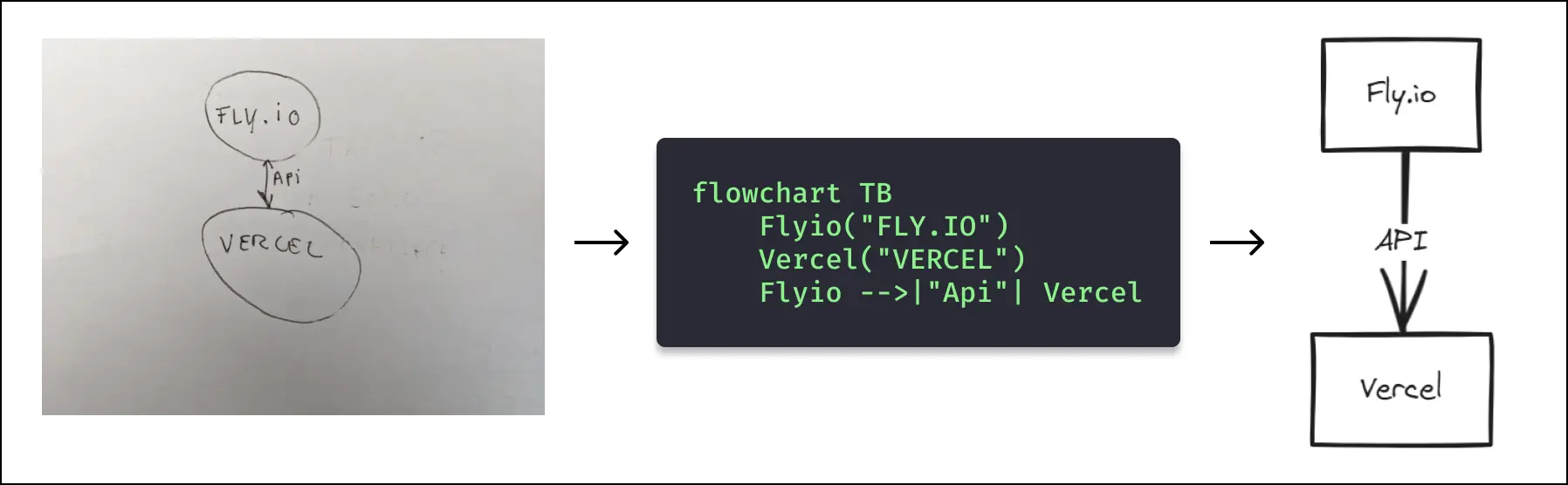

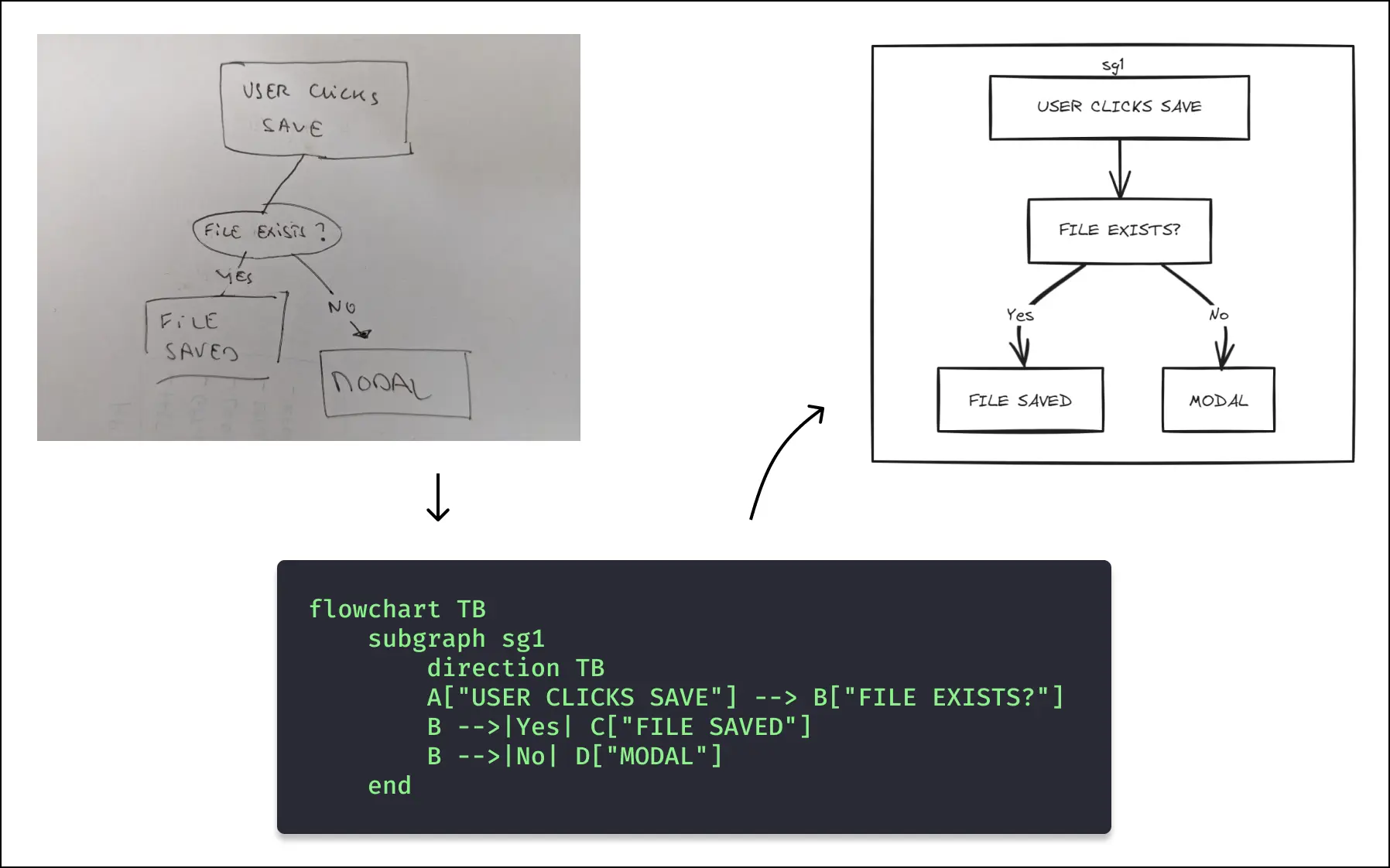

Sample 1

This one was close to reality, even if it was a pretty easy one. Still, notice that the circles became squares and the arrow is now only unidirectional. I like how it correctly labeled the arrow, and the label is much clearer than in the input.

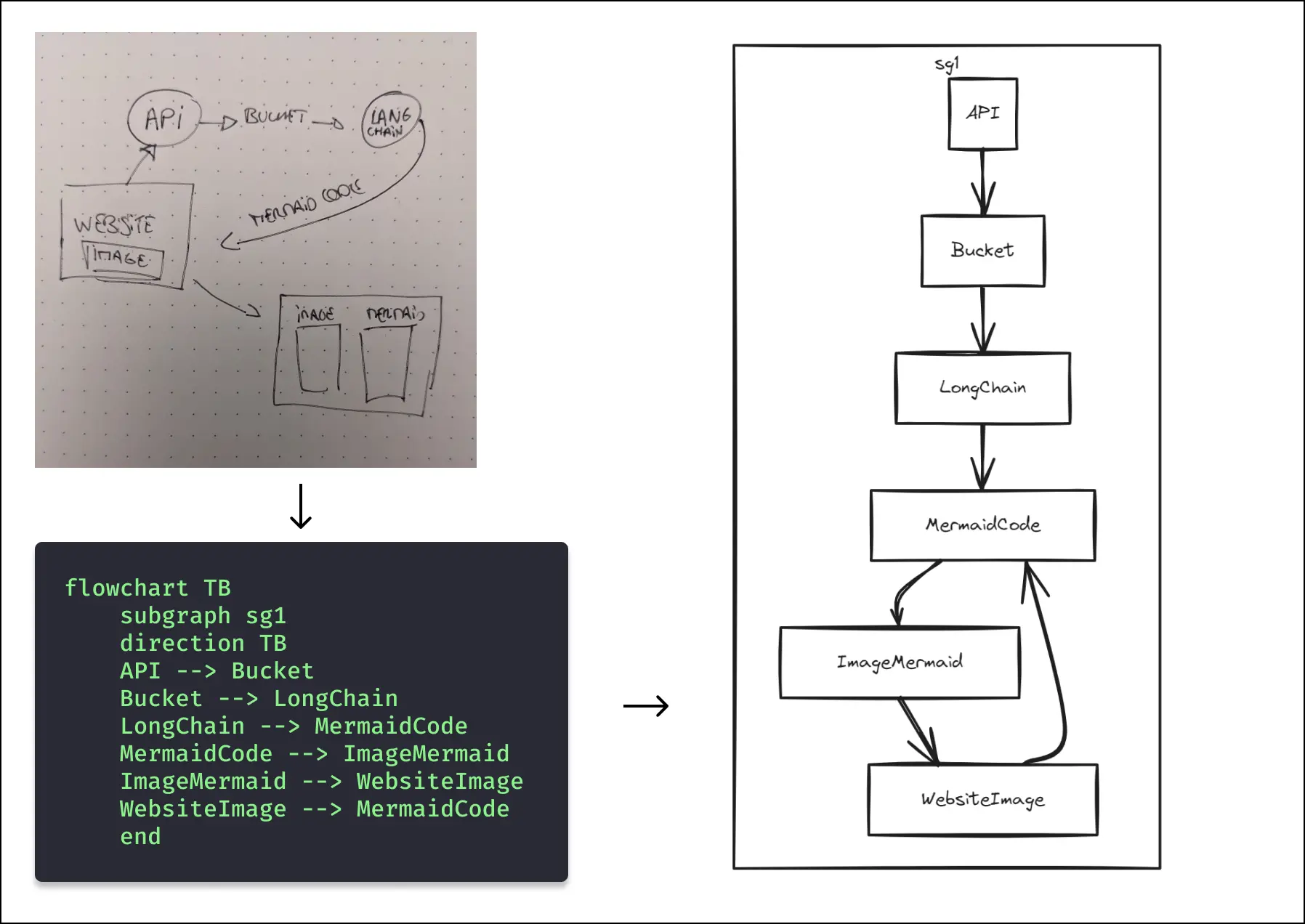

Sample 2

Here, the sample is a rapid sketch I drew when brainstorming how to implement the idea. I didn't know I was about to stress-test my implementation, courtesy of my handwriting. The model nearly got the text right but failed to represent the flowchart nicely enough. The input is confusing and hard to decipher, even for a human. Fair enough, then.

Sample 3

Rock star! It got it right. Interestingly, this is yet another example of how unreliable the outputs are: when I send the same input multiple times, the model sometimes gets the word 'Modal' right and sometimes doesn't.

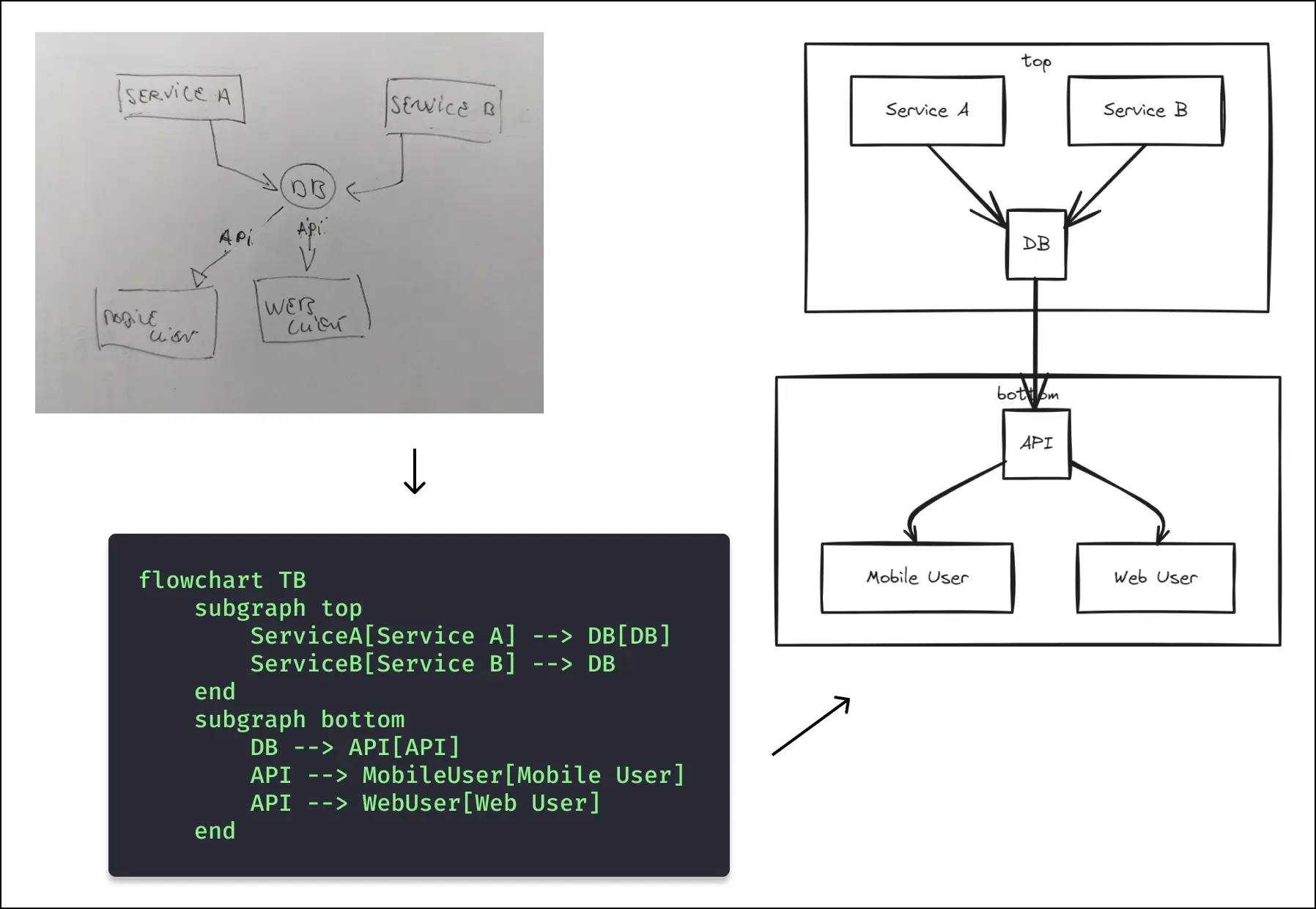

Sample 4

In this case, the model took the liberty of grouping some elements together. It's probably not incorrect. 'API' can sit in its own node in this case. Also, the handwriting is so bad that the model tried its best to come up with something logical (something the prompt requests). Instead of 'mobile client', it went with 'mobile user'. That's not what I meant, but it can be easily fixed. It also added 'top' and 'bottom' sections, probably trying to give a title to each subgraph.

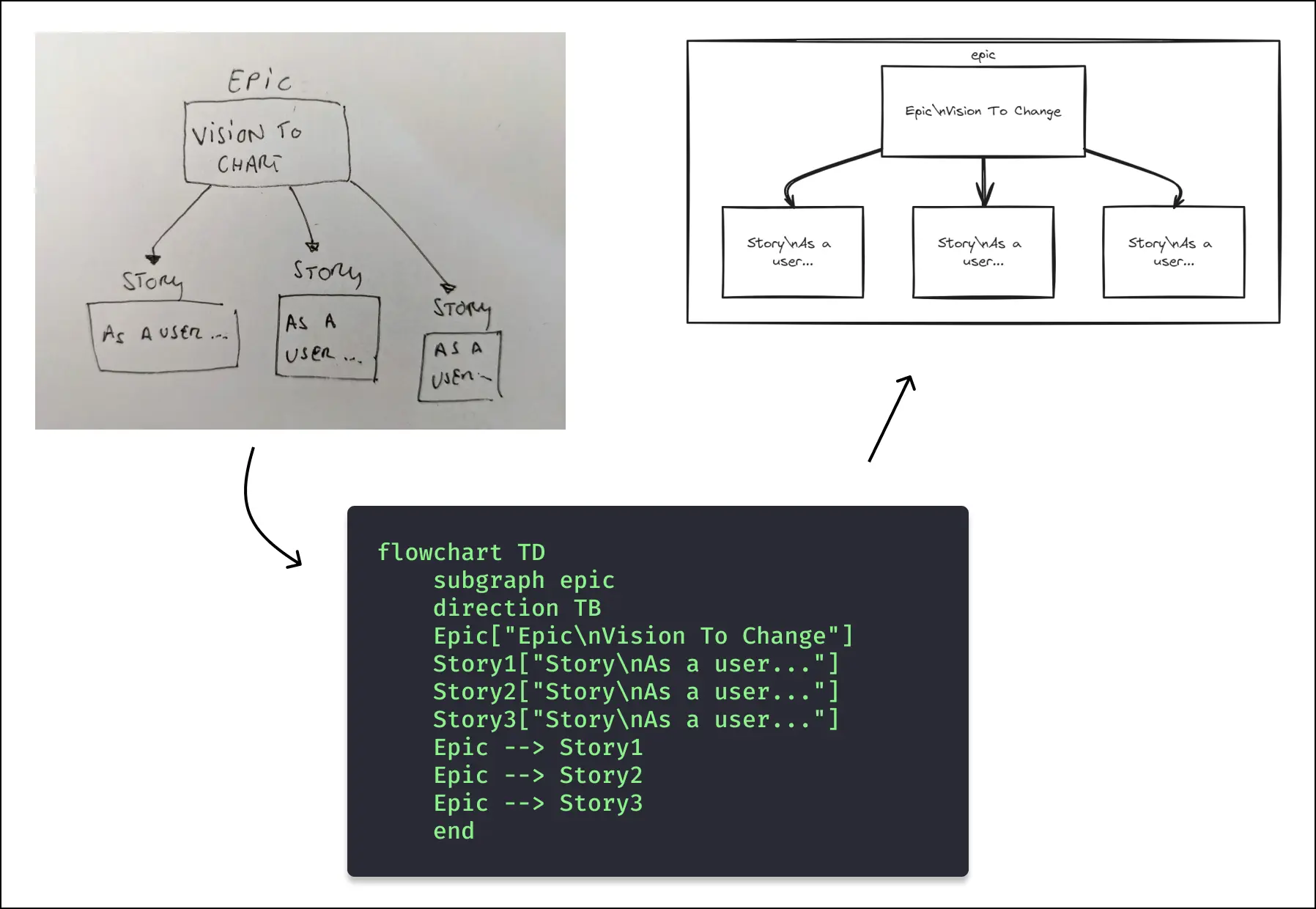

Sample 5

In this example, we can see a bug with line breaks in the current version. I need to refine how the output is parsed before it's passed to the Excalidraw package. Maybe each label should be passed as a single line, or line breaks should be handled properly using Mermaid's line break syntax.
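One way to "properly handle" line breaks is a small post-processing step that rewrites breaks inside node labels as Mermaid's `<br/>`. This is a sketch under an assumption: that the bug comes from the model putting either real newlines or the literal characters `\n` inside square-bracket labels. `fix_label_line_breaks` is hypothetical, it ignores other node shapes and edge labels, and whether mermaid-to-excalidraw renders `<br/>` as a line break would need checking.

```python
import re

# Square-bracket node labels, e.g. A[Some text] or A["Some text"].
# The character class forbids brackets, so a match never spans two nodes.
LABEL_RE = re.compile(r"\[([^\[\]]*)\]")


def fix_label_line_breaks(code: str) -> str:
    """Rewrite line breaks inside square-bracket labels as <br/>.

    Handles both the two-character sequence backslash + n and real newline
    characters. Newlines between statements are left alone because they sit
    outside the brackets.
    """

    def _fix(match: re.Match) -> str:
        label = match.group(1).replace("\\n", "<br/>").replace("\n", "<br/>")
        return "[" + label + "]"

    return LABEL_RE.sub(_fix, code)
```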

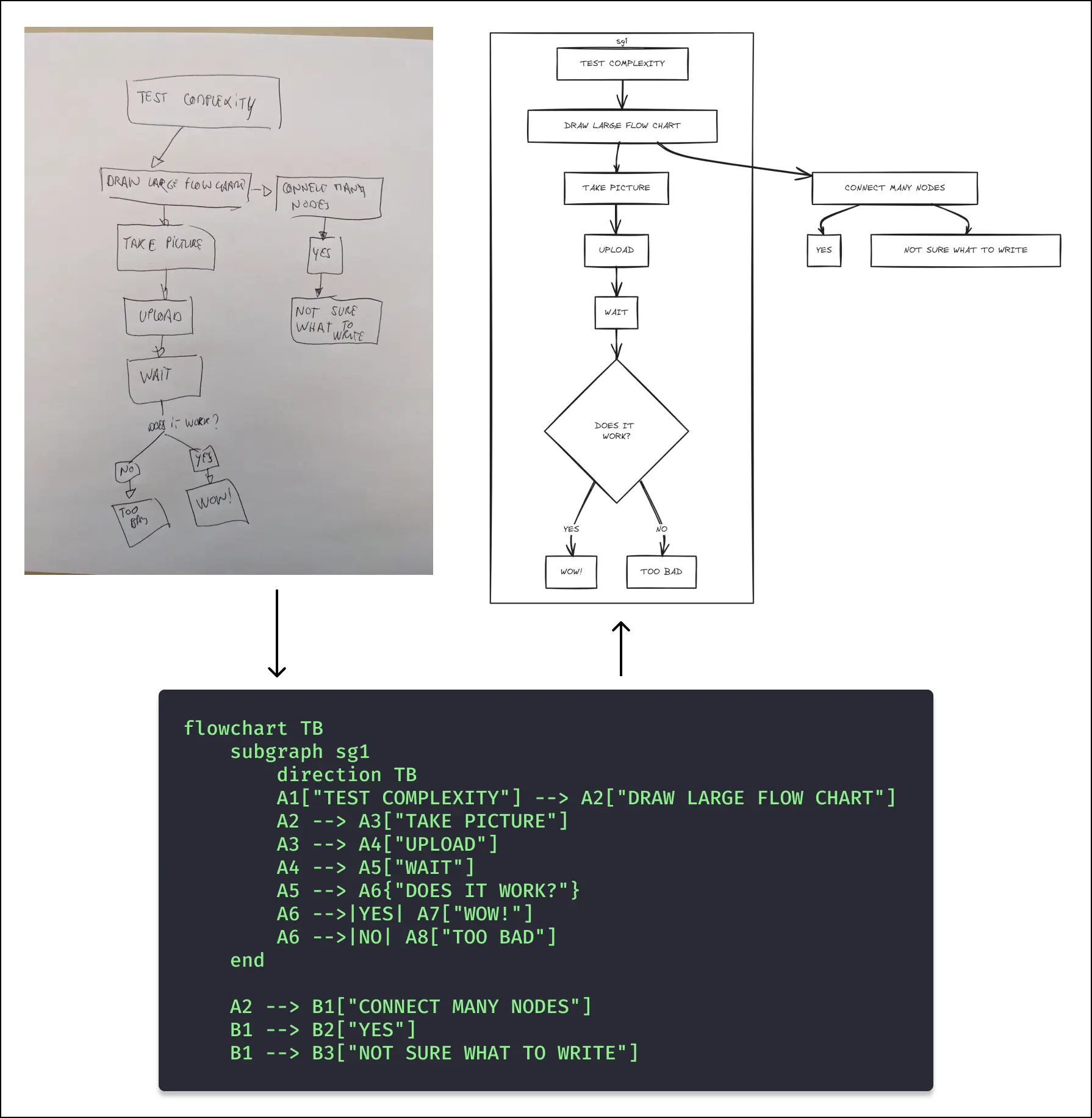

Sample 6

This one took more than 15 seconds to generate, and I was surprised that it even worked! It's nearly correct, and I actually prefer the generated layout to my input!

Limitations and trade-offs

Since this app is based on three tools with specific behaviors and limitations, there are many possible causes of degraded outputs or failures, on top of the usual issues one could face when building a web app.

- gpt-4-turbo's outputs can be wrong in surprising ways and can (and mostly will) differ for the same input.

- mermaid-to-excalidraw only supports flowcharts. Anything else will not work, so it was essential to make this clear in the prompt.

- Large or hard-to-read flowcharts will simply fail, either by hitting the max token limit or by confusing gpt-4-turbo too much.

- You can surely get similar results by using ChatGPT to convert input images to mermaid code and then using Excalidraw's feature for generating charts from mermaid code (available in the editor's AI section). Tough life being a thin OpenAI client!
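Since only flowcharts survive the conversion, a cheap guard is to check the model's output before handing it to mermaid-to-excalidraw. A minimal sketch, assuming the cleaned-up mermaid code is available as a string: it accepts the `flowchart` header and its older `graph` alias, skipping blank lines and `%%` comments. `is_flowchart` is a hypothetical helper, and it does not handle diagrams that start with a YAML front-matter block.

```python
import re

# Mermaid flowcharts start with "flowchart" or its older alias "graph",
# optionally followed by a direction such as TD or LR.
HEADER_RE = re.compile(r"^(?:flowchart|graph)\b")


def is_flowchart(code: str) -> bool:
    """Return True if the first meaningful line declares a Mermaid flowchart."""
    for line in code.splitlines():
        stripped = line.strip()
        # Skip blank lines and Mermaid comments (lines starting with %%).
        if not stripped or stripped.startswith("%%"):
            continue
        return bool(HEADER_RE.match(stripped))
    return False
```

When the check fails, the backend could retry the prompt or return an explicit error instead of passing a diagram type the converter can't handle.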

As often with LLMs, the plain and straightforward use case works well, but the real work lies outside the happy path.

In terms of performance on the deployed app, generating a flowchart takes 10 seconds on average: often longer, rarely less. I measured this by timing the samples in this post; the more complex the submitted picture, the longer it takes. It's probably faster to reproduce simple flowcharts from scratch in Excalidraw, but it's about the perceived value, isn't it?
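A simple way to reproduce that kind of measurement is to time each end-to-end call with a monotonic clock and summarize the durations. This is a sketch, not the script used for this post; `time_calls` is hypothetical, and in practice `fn` would be whatever triggers one generation (for example, uploading a sample and polling until the chart is ready).

```python
import statistics
import time
from typing import Callable, Iterable, TypeVar

T = TypeVar("T")


def time_calls(fn: Callable[[T], object], inputs: Iterable[T]) -> dict:
    """Call fn once per input and summarize wall-clock durations in seconds.

    inputs must be non-empty: mean/min/max are undefined for zero samples.
    """
    durations = []
    for item in inputs:
        start = time.perf_counter()
        fn(item)
        durations.append(time.perf_counter() - start)
    return {
        "count": len(durations),
        "mean": statistics.mean(durations),
        "min": min(durations),
        "max": max(durations),
    }
```

Reporting min and max next to the mean makes the long tail visible, which matters here since complex pictures take noticeably longer.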

What's next?

This is a side project that wasn't even meant to be shipped (side projects are meant to gather dust in a private repo, aren't they?). That said, if I go for another pass, I have a few more features in mind:

- More straightforward mobile-to-desktop flow: While creating this POC, I've been taking pictures with my phone, sending them to my desktop computer, and uploading them via my browser. UX award my way, please.

- Mermaid code customization to alter the Excalidraw output: There are many reasons for the process to fail or output a flowchart that doesn't match the input. The ability to fine-tune the output and correct small mistakes will make the tool more helpful, albeit less magical.

- Better input pre-processing: The input is critical for the consistency and relevancy of AI models' results. Currently, the app does zero pre-processing before sending the image to OpenAI. This is costly and can lead to many errors (for instance, the model can't cope with upside-down photos).

- Support more input types: The initial intention was to support hand-drawn charts, but uploading existing charts works, too. This is more of a byproduct, and proper support would require a bit more work, prompt engineering, and far more testing!
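On the cost side of pre-processing: OpenAI's vision guide (as written for gpt-4-vision-preview and gpt-4-turbo) described how an image is billed in "high" detail mode: it is scaled to fit within 2048 x 2048, then scaled so its shortest side is 768 px, and each 512 px tile costs 170 tokens plus a fixed 85; "low" detail (the `detail` field of an image part) is a flat 85 tokens. The sketch below encodes those rules, assuming they still apply (check the current docs) and assuming images are only ever scaled down. `image_input_tokens` is a hypothetical helper.

```python
import math


def image_input_tokens(width: int, height: int, detail: str = "high") -> int:
    """Estimate the input tokens billed for one image.

    Follows the rules documented for gpt-4-vision-preview / gpt-4-turbo;
    verify against current OpenAI docs before relying on it.
    """
    if detail == "low":
        return 85  # flat cost, regardless of size
    # Step 1: scale down to fit within a 2048 x 2048 square (keeping aspect ratio).
    longest = max(width, height)
    if longest > 2048:
        width, height = width * 2048 // longest, height * 2048 // longest
    # Step 2: scale down so that the shortest side is 768 px.
    shortest = min(width, height)
    if shortest > 768:
        width, height = width * 768 // shortest, height * 768 // shortest
    # Step 3: count 512 px tiles; each costs 170 tokens, plus a fixed 85.
    tiles = math.ceil(width / 512) * math.ceil(height / 512)
    return 85 + 170 * tiles
```

For example, a 4032 x 3024 phone photo works out to 765 tokens in high detail, while a 512 x 384 crop fits in a single tile (255 tokens), so cropping to the sketch itself is one place where pre-processing could pay off.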

Conclusion

Building this was fun, but it has again proven how difficult it is to obtain reliable and relevant results from an LLM, especially when inputs are more complex than a few lines of text. Getting to a decent wow effect is pretty straightforward, but closing the gap to obtain high-quality outputs is a long road that should not be underestimated. However, the tool could become valuable enough with more work, particularly with the feature allowing users to modify the generated mermaid code.